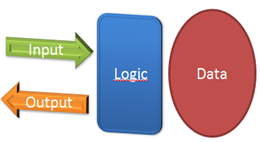

IO, logic and data

In essence, all computer systems are about I/O: data is sent into the system and data is sent back.

In its simplest form it looks like this. Note that there is no logic involved: raw data is passed in as input to change the stored data, and the changed data is sent back out.

I would guess that most systems in the world take this approach; think about the countless spreadsheets lying around with absolutely no logic.

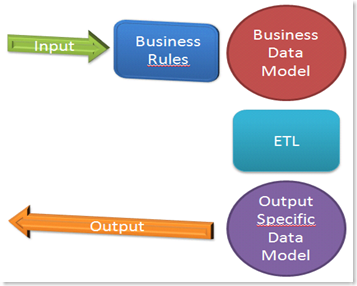

When logic is introduced, I usually see it added as the following layer.

The logic now has 2 distinct responsibilities.

- All input is validated against the business rules, before changes to the data.

- Exposure and transformation of the data to a specific client.

These 2 responsibilities are very different. When the data store is optimized for one, the other suffers. If a relational database is used as the data store, the data schema can be normalized to make input commands more effective and to help keep the data consistent. But a normalized data store is not good for output to the client, because the client often needs data from multiple tables. Either a lot of joins are used or, when using Active Record, DDD entities or the like, whole table rows are loaded into RAM, even if only a few fields are needed from each table.

De-normalizing the data schema, on the other hand, makes input commands tricky, because multiple versions of the truth can be scattered all over the database. This quickly ends in inconsistent data.
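To make the trade-off concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customer`, `purchase` and `purchase_flat` tables are hypothetical names invented for this illustration; they only serve to show a normalized and a de-normalized version of the same data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized (hypothetical schema): each fact is stored exactly once.
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE purchase (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(id),
        total REAL NOT NULL
    );
    -- De-normalized: the customer name is copied onto every row.
    CREATE TABLE purchase_flat (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        customer_name TEXT NOT NULL,
        total REAL NOT NULL
    );

    INSERT INTO customer VALUES (1, 'Ann');
    INSERT INTO purchase VALUES (10, 1, 25.0), (11, 1, 40.0);
    INSERT INTO purchase_flat VALUES (10, 1, 'Ann', 25.0), (11, 1, 'Ann', 40.0);
""")

# Normalized write: one row changes, and every later read sees the new name.
conn.execute("UPDATE customer SET name = 'Anna' WHERE id = 1")

# Normalized read: the client view needs a join to assemble its rows.
normalized_view = conn.execute("""
    SELECT c.name, p.total
    FROM purchase AS p
    JOIN customer AS c ON c.id = p.customer_id
    ORDER BY p.id
""").fetchall()

# De-normalized write: a command that updates only one of the copies
# leaves two versions of the truth behind.
conn.execute("UPDATE purchase_flat SET customer_name = 'Anna' WHERE id = 10")

# De-normalized read: no join needed, but the data is now inconsistent.
flat_view = conn.execute(
    "SELECT customer_name, total FROM purchase_flat ORDER BY id"
).fetchall()
names_in_flat = {name for name, _ in flat_view}

print(normalized_view)  # [('Anna', 25.0), ('Anna', 40.0)]
print(flat_view)        # [('Anna', 25.0), ('Ann', 40.0)]
```

The normalized schema makes the rename a single-row change but forces a join on every read; the flat table reads without a join but now holds both `'Ann'` and `'Anna'` for the same customer.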

The logic also suffers from an asymmetry in how the code is designed. Business rules are usually object centric, while client transformation is data centric. This creates an object <-> data structure asymmetry, where the code ends up as neither good objects nor good data structures. Objects want to encapsulate data and operate on it, while data structures want to expose data and let others operate on it. Both are needed and have valid reasons to be in a system: objects are good at adding data without breaking their contract, while data structures are good at adding new methods without breaking their contract. But hybrids break their contract when either data or methods are added, so they are good at neither.
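A small sketch of the two styles kept apart, in Python. The names `Account`, `AccountRow` and `to_report_line` are hypothetical and exist only for this illustration; the `snapshot` method is one possible way to cross the boundary between the two, not the only one.

```python
from dataclasses import dataclass


# Data structure: exposes its data and has no behavior of its own.
@dataclass(frozen=True)
class AccountRow:
    balance: int


# Object: hides its data and exposes behavior guarded by business rules.
class Account:
    def __init__(self, balance: int) -> None:
        self._balance = balance  # not part of the public contract

    def withdraw(self, amount: int) -> None:
        # Hypothetical business rule: no overdrafts, no non-positive amounts.
        if amount <= 0 or amount > self._balance:
            raise ValueError("withdrawal violates the business rules")
        self._balance -= amount

    def snapshot(self) -> AccountRow:
        # Boundary between the two worlds: hand out a plain copy of the data.
        return AccountRow(balance=self._balance)


# Client transformation: a free function that operates on exposed data.
# New functions like this can be added without touching Account.
def to_report_line(row: AccountRow) -> str:
    return f"Balance: {row.balance}"


account = Account(100)
account.withdraw(30)
print(to_report_line(account.snapshot()))  # Balance: 70
```

A hybrid would be an `Account` with a public `balance` field plus the rule-checking `withdraw` method: clients could then bypass the rule, and any change to the field would ripple into every function reading it.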

Introducing multiple models

A possible solution could look like this.

The logic is split up: business rules are applied before the input is saved to the business data model, and an ETL process is used to transform the business data into an output-specific data model. There could be multiple output-specific models (for views, reports and service results) and multiple clients (web app, service etc.) using them.
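The split described above can be sketched roughly as follows, again with Python's built-in `sqlite3`. All table and function names (`customer`, `purchase`, `customer_report`, `place_purchase`, `refresh_customer_report`) are assumptions made up for the example, and the ETL step is a naive full rebuild; a real system might update the read model incrementally or on a schedule.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Business data model (hypothetical): normalized, optimized for changes.
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE purchase (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(id),
        total REAL NOT NULL
    );
    -- Output-specific model: one flat row per customer, optimized for reads.
    CREATE TABLE customer_report (
        customer_id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        purchase_count INTEGER NOT NULL,
        total_spent REAL NOT NULL
    );
""")


def place_purchase(customer_id: int, total: float) -> None:
    """Input side: validate against the business rules, then save."""
    if total <= 0:
        raise ValueError("a purchase must have a positive total")
    known = conn.execute(
        "SELECT 1 FROM customer WHERE id = ?", (customer_id,)
    ).fetchone()
    if known is None:
        raise ValueError("unknown customer")
    conn.execute(
        "INSERT INTO purchase (customer_id, total) VALUES (?, ?)",
        (customer_id, total),
    )


def refresh_customer_report() -> None:
    """ETL: rebuild the read model from the business data."""
    conn.execute("DELETE FROM customer_report")
    conn.execute("""
        INSERT INTO customer_report (customer_id, name, purchase_count, total_spent)
        SELECT c.id, c.name, COUNT(p.id), COALESCE(SUM(p.total), 0)
        FROM customer AS c
        LEFT JOIN purchase AS p ON p.customer_id = c.id
        GROUP BY c.id, c.name
    """)


def customer_report() -> list:
    """Output side: the client reads prepared rows, with no joins or rules."""
    return conn.execute(
        "SELECT name, purchase_count, total_spent "
        "FROM customer_report ORDER BY customer_id"
    ).fetchall()


conn.execute("INSERT INTO customer (id, name) VALUES (1, 'Ann')")
place_purchase(1, 25.0)
place_purchase(1, 40.0)
refresh_customer_report()
report = customer_report()
print(report)  # [('Ann', 2, 65.0)]
```

Note that `place_purchase` contains only rules and `customer_report` contains only a plain read; the transformation lives entirely in the ETL step between the two models.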

So in essence there is a model with logic optimized for changes, and a model with transformations optimized for reads. The general performance of the system should be better, at the cost of using more disk space.

If you think about it, data is already transformed into other models. BI transforms the data into aggregate structures, and full-text search transforms data into indexes. The new part is to transform relational data optimized for change into relational data optimized for reads. This also has a radical influence on the code design, because the business rules no longer get polluted with data transformation and data structure behavior.
