Runtime diagnostics

There are 3 major ways to diagnose code at runtime.

I think most .NET developers use the debugger everyday in Visual Studio, and Automated tests (actually mostly unit tests) get a lot of blog space. But interestingly logging i not mentioned often? In some scenarios on way is more useful than the 2 other, and some times it does not matter which one is used. But all 3 ways have distinct advantages that can be used, to ease our busy developer lives.

Advantages

If your application has crashed, It does not matter how many unit tests or how good you are with the debugger. A good log will help you identify the problem.

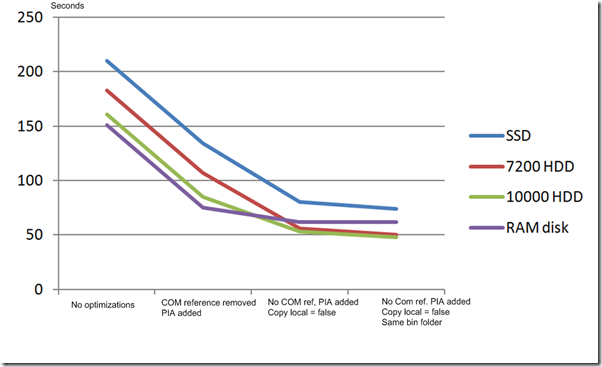

In a live application the performance degradation(amount of log messages) can be controlled, by setting a log level. The application does not even have to be restarted. Unit tests does not do much with live applications and usually it is not wise to attach a debugger to a live application.

Statistics can be gathered from logs, like key performance indicators, number of exceptions etc. WMI can also be used, but that is actually just an other kind of logging (internal vs external).

Logs (often log files) act as an alternative UI, administrators/supporters etc. can read logs and determine out of process usage of the application.

If there is a good log there is no reason to call the user and start a “shared screen” so you can see what he does.

Messages

Don't just output values, describe what the values mean. When reading the log, you don't have the source code to help you - only the previous and next message(s).

Logging as a global error handler

As far as I can tell this is where most applications have some form of logging. The implementation looks differently depending on the type application.

In a WinForms app it looks something like this.

And in an ASP.NET app it could looks like this.

However this at the application boundary, it should be the only place in your code where the type Exception is caught. All uncaught exceptions should be allowed to bubble up to the global error handler and properly logged.

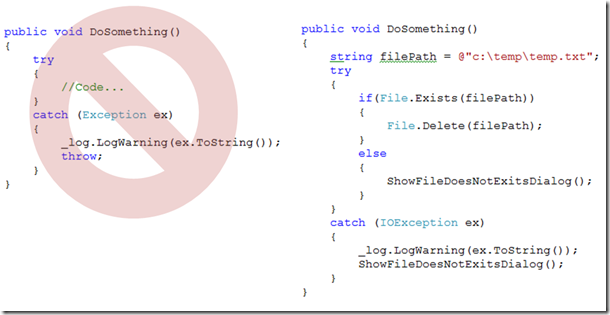

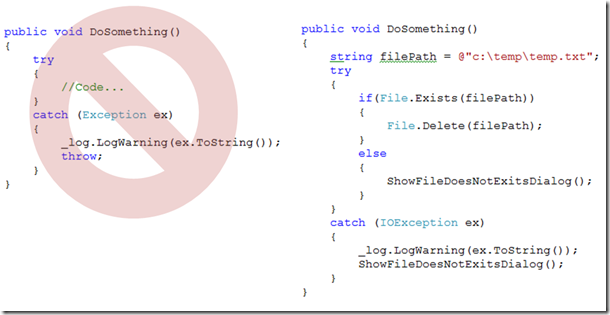

Logging is not error handling for the user

Don't log an exception just to re-throw the exception. But if you actually catch an specific exception type, always log the exception. The fact that the exception was caught could come in handy when diagnosing a problem with the application.

Decision Points

Are where the code branch, like if, switch and the like.

Don’t log inside a decision point, and don’t log where there is no decision point. Also don't log values that are not used for the decision.

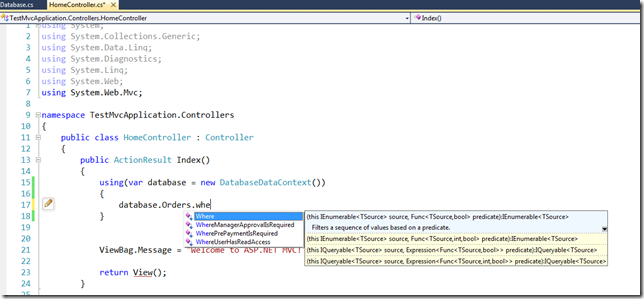

Do log before a decision point and do log the value(s) that are used to determine what branch to use. Do log all the values in a single formatted message. There are more decision points than “if”’s, logical operators also act as a decision point.

Things to log even though it is not a decision point

When the application uses out of process resources (File system, starts a process etc.), Do log the resource used.

This could help an administrator understand how the application works.

Another thing to always log is the use of DateTime.Now

DateTime.Now problems are often elusive and hard to replicate in a debugging environment, having the exact value help to understand under what conditions a problem can occur.

Point of indirection (like events) where there is no clear path to the calling code. It can be helpful to see what events get called and in what order.

Assertion

Don’t use logging for assertion, a manual test with the debugger or a repeatable automated test are better suited for this.

Message Context

Every log message should have context values that determines this usage from others. Often things like:

- Timestamp

- Level (debug, trace, info, warning, error, fatal)

- Thread

- Principal (username)

- Url (for web apps)

- Request hashcode (for web apps)

- Session hashcode (for web apps)

Using these message contexts, it should be easy to grab interrelated messages for analysis.

Conclusion

Don't neglect logging just because your more familiar with the debugger or you and your agile team has the code base covered with automated tests. In some scenarios logging is a vital view into your running application.

Recommended Reading

http://nirajrules.wordpress.com/2008/06/14/blogging-best-practicies/

http://www.kdgregory.com/index.php?page=java.logging

http://esmithy.net/2004/11/09/effective-logging/

http://www.ibm.com/developerworks/java/library/j-logging/